Introduction

Clustering similar data points is a fundamental task in unsupervised machine learning: it groups data points with similar characteristics without requiring labeled examples. Artificial Intelligence (AI) frameworks offer powerful tools and algorithms for clustering, enabling businesses to discover patterns, identify outliers, and gain insights from unlabeled data. In this blog post, we explore five AI frameworks for clustering similar data points and how they help organizations extract valuable information from large datasets.

Why use AI frameworks for clustering similar data points?

- AI frameworks provide optimized, well-tested implementations of clustering algorithms, reducing the development effort and computation time compared with writing these algorithms from scratch.

- Many AI frameworks scale to large datasets through GPU acceleration or distributed computing, making them suitable for clustering tasks involving big data.

- Clustering algorithms group data points using mathematical measures of similarity, such as distance or density, and frameworks pair them with evaluation metrics that help quantify how coherent and well-separated the resulting clusters are.

- AI frameworks make the clustering process scriptable and repeatable, reducing manual work and making it easy to rerun the analysis as the dataset evolves to uncover new clusters.

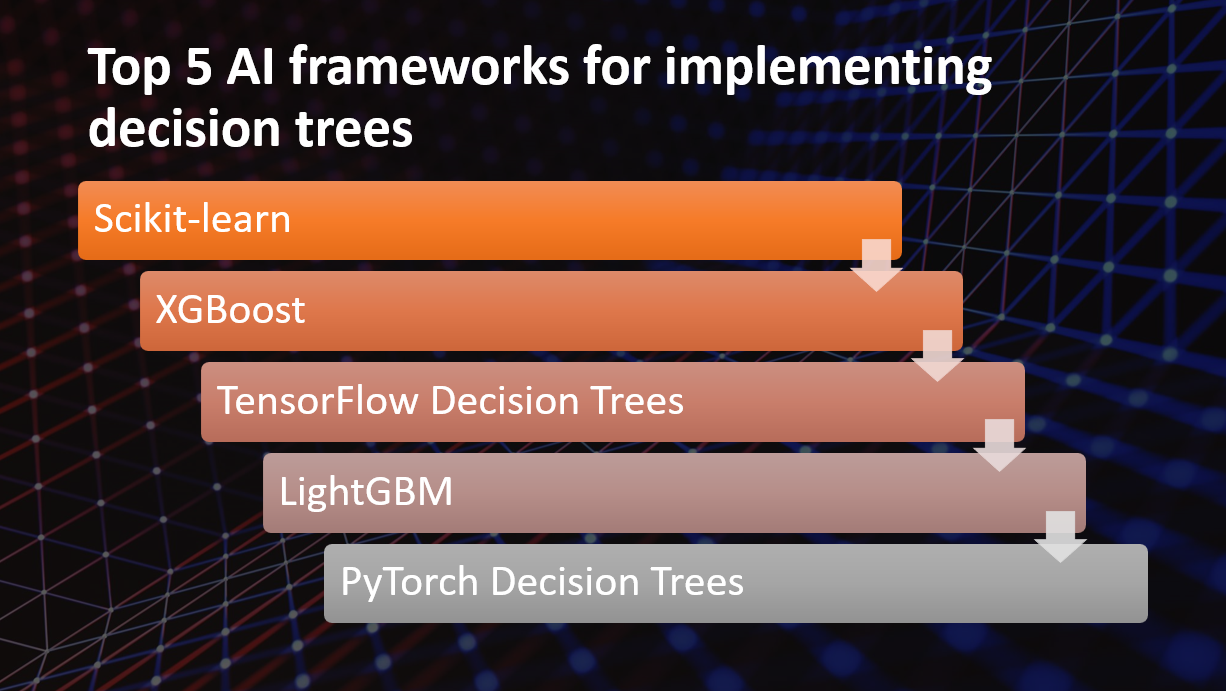

Here are our top 5 AI frameworks for clustering similar data points:

1: scikit-learn

Overview and Importance

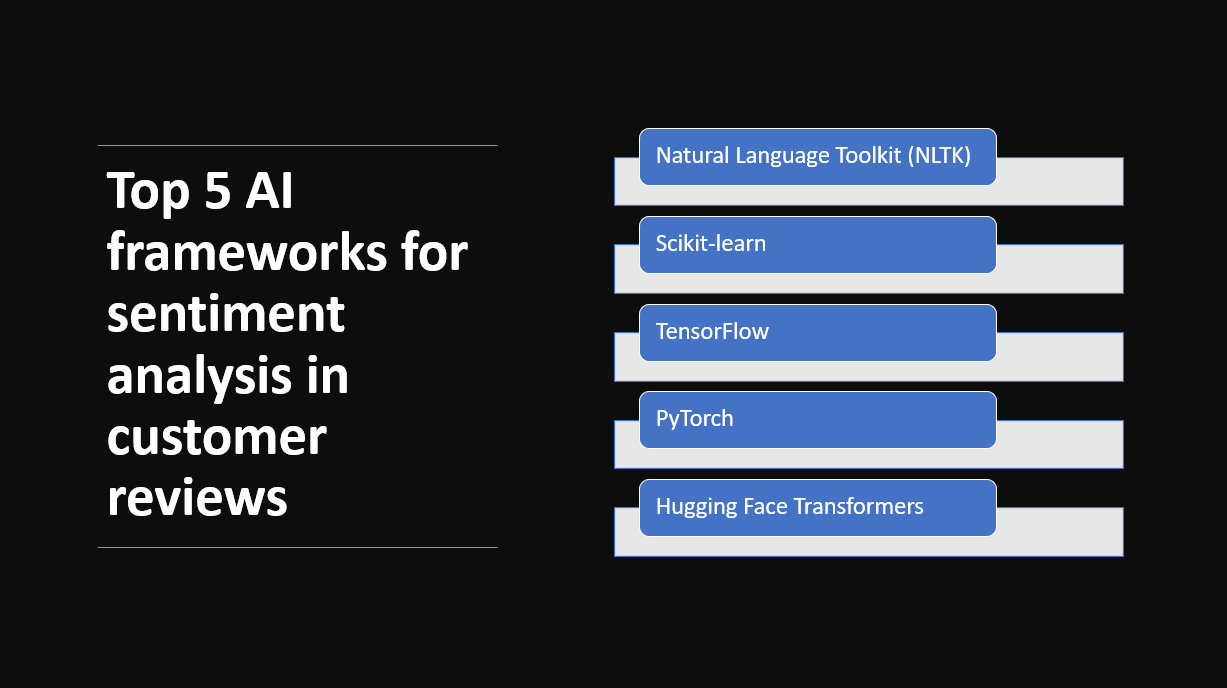

Scikit-learn is a prominent open-source AI framework in Python, known for its extensive support for machine learning tasks, including clustering similar data points. It has gained popularity due to its simplicity, versatility, and rich set of functionalities.

Key Features and Capabilities

Diverse Clustering Algorithms

- Scikit-learn offers various clustering algorithms, such as k-means, DBSCAN, and hierarchical clustering, providing users with multiple options to handle different types of data.
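A minimal sketch of the three algorithms named above, assuming a recent scikit-learn release and synthetic data from `make_blobs`; the dataset and parameter values are illustrative choices, not recommendations. Each estimator is fitted through the same `fit_predict` call:

```python
from sklearn.cluster import DBSCAN, AgglomerativeClustering, KMeans
from sklearn.datasets import make_blobs
from sklearn.preprocessing import StandardScaler

# Illustrative synthetic data: 300 points drawn around 3 centers
X, _ = make_blobs(n_samples=300, centers=3, cluster_std=0.6, random_state=42)
X = StandardScaler().fit_transform(X)  # scale features so distances are comparable

models = {
    "k-means": KMeans(n_clusters=3, n_init=10, random_state=42),
    "DBSCAN": DBSCAN(eps=0.3, min_samples=5),
    "hierarchical (agglomerative)": AgglomerativeClustering(n_clusters=3),
}

labels = {}
for name, model in models.items():
    labels[name] = model.fit_predict(X)
    # DBSCAN labels noise points as -1, so exclude them when counting clusters
    n_found = len(set(labels[name])) - (1 if -1 in labels[name] else 0)
    print(f"{name}: {n_found} clusters")
```

Because the estimators share one interface, switching algorithms is a one-line change. DBSCAN infers the number of clusters from point density instead of taking `n_clusters`, and marks outliers with the label -1.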

Customizable Parameters

- The library allows users to fine-tune clustering by adjusting parameters like the number of clusters, distance metrics, and linkage methods, enabling tailored solutions for specific datasets.
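The sketch below, again on illustrative `make_blobs` data, varies the linkage method of agglomerative clustering while holding the number of clusters fixed, then runs DBSCAN with a non-default distance metric. The specific values (`n_clusters=4`, `eps=1.0`, `metric="manhattan"`) are assumptions chosen for demonstration:

```python
import numpy as np
from sklearn.cluster import DBSCAN, AgglomerativeClustering
from sklearn.datasets import make_blobs

# Illustrative synthetic data: 200 points around 4 centers
X, _ = make_blobs(n_samples=200, centers=4, random_state=0)

# Same number of clusters, different linkage criteria; cluster sizes can differ
# ("single" linkage, for example, is prone to chaining and unbalanced clusters)
sizes = {}
for linkage in ("ward", "complete", "average", "single"):
    model = AgglomerativeClustering(n_clusters=4, linkage=linkage)
    sizes[linkage] = np.bincount(model.fit_predict(X))
    print(f"{linkage:>8}: cluster sizes {sizes[linkage].tolist()}")

# Density-based clustering: neighborhood radius, minimum density, and distance metric
db_labels = DBSCAN(eps=1.0, min_samples=5, metric="manhattan").fit_predict(X)
print("DBSCAN noise points:", int((db_labels == -1).sum()))
```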

Evaluation Metrics

- Scikit-learn includes evaluation metrics like silhouette score and Davies-Bouldin index to assess clustering quality and aid in selecting the optimal clustering approach.
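One common way to use these metrics, shown here as a sketch on synthetic data, is to fit k-means for several candidate values of k and compare the scores. Higher silhouette scores and lower Davies-Bouldin scores indicate better-separated clusters; the range of k and the data are illustrative assumptions:

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import davies_bouldin_score, silhouette_score

# Illustrative synthetic data: 500 points around 4 centers
X, _ = make_blobs(n_samples=500, centers=4, cluster_std=0.8, random_state=7)

results = {}
for k in range(2, 7):
    labels = KMeans(n_clusters=k, n_init=10, random_state=7).fit_predict(X)
    # Silhouette: higher is better (range -1 to 1); Davies-Bouldin: lower is better (>= 0)
    results[k] = (silhouette_score(X, labels), davies_bouldin_score(X, labels))
    print(f"k={k}: silhouette={results[k][0]:.3f}, Davies-Bouldin={results[k][1]:.3f}")

best_k = max(results, key=lambda k: results[k][0])
print("k with the highest silhouette score:", best_k)
```

The two metrics do not always agree, so they are best treated as guides alongside domain knowledge.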

2: TensorFlow

Overview and Importance

TensorFlow, developed by Google, is a general-purpose machine learning framework that can also be used for clustering similar data points. It provides a flexible and scalable platform for building machine learning models, and clustering algorithms can be implemented on top of its tensor operations. Its value for clustering lies in letting researchers and data scientists implement and experiment with custom techniques, including neural-network-based approaches to unsupervised learning.

Key Features and Capabilities

High-Performance Computing

- TensorFlow runs computations on CPUs, GPUs, and TPUs and can compile Python functions into optimized graphs with tf.function, allowing fast processing of large datasets and making it suitable for clustering high-dimensional data.
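To illustrate how clustering can be expressed with TensorFlow's tensor operations, here is a minimal sketch of Lloyd's k-means algorithm. The `kmeans_step` helper and the synthetic data are hypothetical, written for this example rather than taken from a TensorFlow clustering API; `tf.function` compiles the step into a graph, and TensorFlow places the operations on a GPU automatically when one is available:

```python
import numpy as np
import tensorflow as tf


@tf.function
def kmeans_step(points, centroids):
    """One Lloyd iteration: assign points to the nearest centroid, then recompute centroids."""
    # Squared Euclidean distances, shape (n_points, n_clusters)
    distances = tf.reduce_sum(
        tf.square(tf.expand_dims(points, 1) - tf.expand_dims(centroids, 0)), axis=2
    )
    assignments = tf.argmin(distances, axis=1, output_type=tf.int32)
    k = tf.shape(centroids)[0]
    # Per-cluster sums of points and per-cluster point counts
    sums = tf.math.unsorted_segment_sum(points, assignments, num_segments=k)
    counts = tf.math.unsorted_segment_sum(
        tf.ones_like(points[:, :1]), assignments, num_segments=k
    )
    # Keep the old centroid if a cluster received no points
    new_centroids = tf.where(counts > 0, sums / tf.maximum(counts, 1.0), centroids)
    return new_centroids, assignments


# Illustrative synthetic data: three 2-D groups of 100 points each
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [5.0, 5.0], [0.0, 5.0]])
data = np.concatenate([c + rng.normal(scale=0.5, size=(100, 2)) for c in centers])
points = tf.constant(data, dtype=tf.float32)

# Initialize centroids with k randomly chosen data points
centroids = tf.gather(points, rng.choice(len(data), size=3, replace=False))
for _ in range(20):
    centroids, assignments = kmeans_step(points, centroids)

print(np.round(centroids.numpy(), 2))
```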

Extensive Library Support

- TensorFlow provides a large collection of building blocks, such as tensor operations, automatic differentiation, optimizers, and Keras layers, from which clustering algorithms and deep clustering models can be assembled without writing low-level numerical code.

Customizability

- With TensorFlow's flexible architecture, users can design and customize their clustering models, allowing them to tailor the algorithms to their specific use cases and data requirements.
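As a sketch of this flexibility, the example below learns centroids by gradient descent with `tf.GradientTape`, with the distance function passed in as a parameter so it can be swapped (for instance, for cosine distance). The helper names `fit_centroids`, `squared_euclidean`, and `cosine_distance`, the learning rate, and the data are all illustrative assumptions, not part of TensorFlow:

```python
import numpy as np
import tensorflow as tf


def squared_euclidean(points, centroids):
    # Pairwise squared Euclidean distances, shape (n_points, n_clusters)
    return tf.reduce_sum(tf.square(points[:, None, :] - centroids[None, :, :]), axis=-1)


def cosine_distance(points, centroids):
    # 1 - cosine similarity: a drop-in alternative that ignores vector length
    p = tf.math.l2_normalize(points, axis=-1)
    c = tf.math.l2_normalize(centroids, axis=-1)
    return 1.0 - tf.matmul(p, c, transpose_b=True)


def fit_centroids(data, k, distance_fn, steps=100, learning_rate=0.5, seed=0):
    """Hypothetical helper: learn k centroids by gradient descent on the
    mean distance from each point to its nearest centroid."""
    rng = np.random.default_rng(seed)
    points = tf.constant(data, dtype=tf.float32)
    init = data[rng.choice(len(data), size=k, replace=False)].astype(np.float32)
    centroids = tf.Variable(init)
    for _ in range(steps):
        with tf.GradientTape() as tape:
            # k-means-style objective; distance_fn is the customizable part
            loss = tf.reduce_mean(tf.reduce_min(distance_fn(points, centroids), axis=1))
        grad = tape.gradient(loss, centroids)
        centroids.assign_sub(learning_rate * grad)  # plain gradient descent step
    assignments = tf.argmin(distance_fn(points, centroids), axis=1)
    return centroids.numpy(), assignments.numpy()


# Illustrative synthetic data: three 2-D groups of 100 points each
rng = np.random.default_rng(1)
data = np.concatenate(
    [c + rng.normal(scale=0.5, size=(100, 2)) for c in ([0, 0], [5, 5], [0, 5])]
)
centroids, labels = fit_centroids(data, k=3, distance_fn=squared_euclidean)
print(np.round(centroids, 2))
```

For plain k-means, Lloyd's algorithm is usually the simpler choice; the gradient-based pattern becomes useful when the objective has no simple closed-form update.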

3: PyTorch

Overview and Importance

PyTorch is a popular AI framework that is also well suited to clustering similar data points, particularly in research settings. It is known for its dynamic computational graph, which provides flexibility and ease of use when building clustering models. PyTorch's intuitive interface and extensive community support make it a common choice for researchers and developers working on unsupervised learning.

Key Features and Capabilities

Dynamic Computational Graph

- Enables dynamic building and modification of models for experimenting with various clustering algorithms.

GPU Acceleration

- Supports faster model training and inference, crucial for clustering large datasets with high-dimensional features.
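A minimal sketch of k-means in PyTorch that runs on a GPU when one is available and falls back to the CPU otherwise. Because PyTorch executes operations eagerly, the loop can use ordinary Python control flow, such as skipping empty clusters and stopping once the centroids stop moving. The `kmeans_torch` function, its tolerance, and the synthetic data are illustrative assumptions:

```python
import torch


def kmeans_torch(x, k, max_iter=100, tol=1e-4, seed=0):
    """Lloyd's k-means on a 2-D tensor; runs on whichever device x lives on."""
    gen = torch.Generator().manual_seed(seed)
    init_idx = torch.randperm(x.shape[0], generator=gen)[:k].to(x.device)
    centroids = x[init_idx].clone()
    for n_iter in range(1, max_iter + 1):
        # Pairwise Euclidean distances (n_points, k), computed on CPU or GPU
        assignments = torch.cdist(x, centroids).argmin(dim=1)
        new_centroids = centroids.clone()
        for j in range(k):
            members = x[assignments == j]
            if members.shape[0] > 0:  # plain Python branching on tensor data
                new_centroids[j] = members.mean(dim=0)
        shift = (new_centroids - centroids).norm().item()
        centroids = new_centroids
        if shift < tol:  # data-dependent early stopping
            break
    # Final assignment against the last centroids
    assignments = torch.cdist(x, centroids).argmin(dim=1)
    return centroids, assignments, n_iter


device = "cuda" if torch.cuda.is_available() else "cpu"
torch.manual_seed(0)
# Illustrative synthetic data: three 2-D groups of 200 points each
offsets = torch.tensor([[0.0, 0.0], [6.0, 0.0], [3.0, 6.0]])
x = torch.cat([o + 0.5 * torch.randn(200, 2) for o in offsets]).to(device)
centroids, labels, n_iter = kmeans_torch(x, k=3)
print(f"stopped after {n_iter} iterations on {device}")
```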

Extensive Library Support

- Provides a rich ecosystem of libraries and tools for streamlined clustering workflow, including data preprocessing, model evaluation, and visualization.

4: Keras

Overview and Importance

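Keras is a popular high-level AI framework for building neural networks, and it can be used for clustering similar data points, typically by learning compact representations (for example, with an autoencoder) that are then clustered. It is valued for its user-friendly and intuitive API, which lets researchers and developers quickly build and experiment with such models.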

Key Features and Capabilities

User-Friendly API

- Keras provides a simple and easy-to-use interface, making it accessible to both beginners and experienced practitioners in the field of unsupervised learning.

Modularity

- Keras supports a modular approach to model building, allowing users to assemble individual layers into complex clustering architectures.
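To illustrate the modular approach, the sketch below assembles an autoencoder from `Dense` layers, trains it to reconstruct its input, and then clusters the learned 2-D embeddings with scikit-learn's k-means. This two-stage design is a simplified illustration, not a Keras clustering API; the layer sizes, number of epochs, and synthetic data are assumptions. Methods such as Deep Embedded Clustering (DEC) go further by fine-tuning the encoder with a clustering objective:

```python
import keras
from keras import layers
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Illustrative synthetic data: 600 points in 20 dimensions around 3 centers
X, _ = make_blobs(n_samples=600, n_features=20, centers=3, random_state=1)
X = ((X - X.mean(axis=0)) / X.std(axis=0)).astype("float32")  # standardize features

# Encoder: stack Dense layers down to a 2-D embedding
inputs = keras.Input(shape=(20,))
h = layers.Dense(16, activation="relu")(inputs)
embedding = layers.Dense(2, name="embedding")(h)
# Decoder: mirror the encoder to reconstruct the input
h = layers.Dense(16, activation="relu")(embedding)
outputs = layers.Dense(20)(h)

autoencoder = keras.Model(inputs, outputs)
encoder = keras.Model(inputs, embedding)  # reuses the trained encoder layers
autoencoder.compile(optimizer="adam", loss="mse")
autoencoder.fit(X, X, epochs=20, batch_size=32, verbose=0)

# Cluster the learned low-dimensional embeddings with k-means
Z = encoder.predict(X, verbose=0)
labels = KMeans(n_clusters=3, n_init=10, random_state=1).fit_predict(Z)
print("cluster sizes:", [int((labels == c).sum()) for c in range(3)])
```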

Backend Flexibility

- Keras 3 can run on TensorFlow, JAX, or PyTorch as its backend (earlier multi-backend versions of Keras also supported Theano and CNTK), offering flexibility and compatibility with different computational environments and enhancing its usability in clustering tasks.

5: Apache Spark MLlib

Overview and Importance

Apache Spark MLlib is an essential component of the Apache Spark ecosystem, providing powerful machine learning capabilities, including clustering similar data points. Its distributed computing approach enables scalable and efficient processing of large datasets, making it a popular choice for big data analytics.

Key Features and Capabilities

Distributed Computing

- Apache Spark MLlib leverages the distributed computing capabilities of Apache Spark, making it suitable for handling big data and large-scale clustering tasks efficiently.

Variety of Clustering Algorithms

- MLlib provides various clustering algorithms, including k-means, Gaussian Mixture Model (GMM), and Bisecting k-means, offering flexibility in selecting the most appropriate algorithm for different clustering scenarios.

Integration with Spark Ecosystem

- MLlib seamlessly integrates with other Spark components, such as Spark SQL and Spark Streaming, enabling end-to-end data processing and machine learning workflows in Spark applications.

Conclusion

AI frameworks play a significant role in clustering similar data points, allowing businesses to identify patterns and gain valuable insights from their data. The five AI frameworks for clustering covered in this post are scikit-learn, TensorFlow, PyTorch, Keras, and Apache Spark MLlib.

Here are their key features, capabilities, and advantages:

scikit-learn: A versatile framework with various clustering algorithms, suitable for small to medium-sized datasets and providing easy implementation.

TensorFlow and Keras: Powerful deep learning frameworks that support clustering through custom implementations of methods such as self-organizing maps (SOM) or autoencoder-based clustering, and that suit large-scale datasets thanks to GPU acceleration.

PyTorch: Provides flexibility and performance in clustering tasks, especially in research-oriented environments.

Apache Spark MLlib: A distributed framework that can handle large-scale datasets, enabling efficient clustering on big data.

Clustering has a significant impact on various aspects of data analysis:

Pattern recognition: Clustering helps in identifying groups of similar data points, revealing underlying patterns and structures in the data.

Data exploration: Clustering assists in data exploration, enabling businesses to understand the composition and characteristics of their datasets.

Data-driven decision-making: Insights from clustering facilitate data-driven decision-making, supporting strategic initiatives and improving business performance.

Businesses are encouraged to explore these AI frameworks and leverage their clustering algorithms. By applying clustering techniques to their data, businesses can identify patterns, discover hidden insights, and optimize processes. Clustering empowers businesses to make informed decisions and gain a competitive edge through data exploration and data-driven strategies.