Introduction

Speech recognition and transcription technologies have transformed the way we interact with computers and access information.

From voice assistants to transcription services, Artificial Intelligence (AI) platforms play a crucial role in enabling accurate and efficient speech-to-text conversion.

In this blog post, we will explore the top seven AI platforms for speech recognition and transcription, highlighting their capabilities and accuracy.

- AI platforms offer high accuracy in converting speech into written text.

- AI platforms process speech data quickly, providing real-time or near-real-time transcription.

- AI platforms can handle large volumes of speech data efficiently.

- AI platforms support multiple languages for speech recognition and transcription.

- Some AI platforms allow for customization to adapt models to specific domains or accents.
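Accuracy claims like the ones above are usually quantified as word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the platform's transcript into a human reference transcript, divided by the number of words in the reference. The following is a minimal sketch of that calculation, not any vendor's official scoring tool. The function name `word_error_rate` is our own, and it assumes simple whitespace tokenization and case-insensitive matching, which real evaluations often refine (for example, by stripping punctuation).

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] is the word-level edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # turning ref[:i] into an empty hypothesis takes i deletions
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion (word missing from hypothesis)
                curr[j - 1] + 1,     # insertion (extra word in hypothesis)
                prev[j - 1] + cost,  # substitution or exact match
            ))
        prev = curr
    return prev[-1] / len(ref)


# One of four reference words is missing, so the WER is 0.25.
print(word_error_rate("the quick brown fox", "the quick fox"))
```

Running the same reference recordings through several platforms and comparing WER is a practical way to check which one is most accurate for your own audio.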

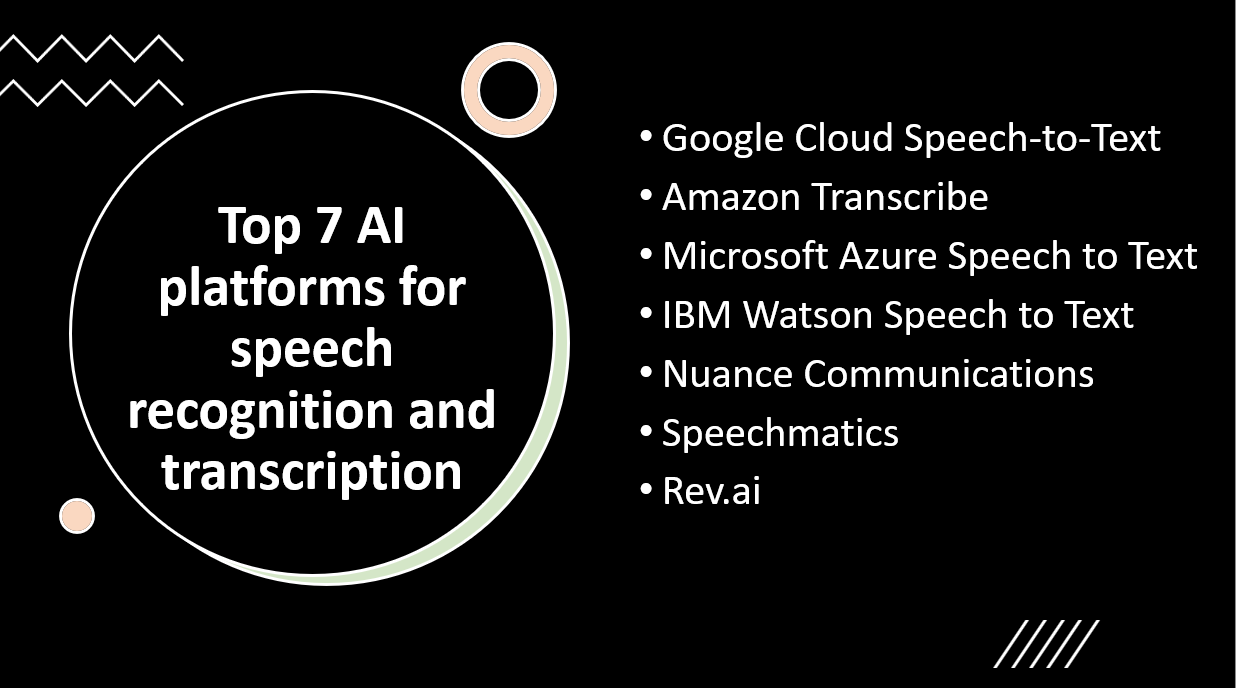

Here are our top seven AI platforms for speech recognition and transcription:

1: Google Cloud Speech-to-Text

Overview and Importance

Google Cloud Speech-to-Text is a leading AI platform developed by Google that provides powerful speech recognition and transcription capabilities. It plays a crucial role in converting spoken language into written text, enabling a wide range of applications in various industries.

Learn more about Google Cloud Speech-to-Text

Key Features and Capabilities

Google Cloud Speech-to-Text offers several key features and capabilities:

Real-time and batch transcription

- It can process both live streaming audio and pre-recorded audio files for transcription, allowing for immediate or offline transcription needs.

Noise handling

- It can effectively handle noisy audio environments, making it suitable for transcription in various real-world scenarios.

Automatic punctuation

- The platform can automatically insert punctuation marks, enhancing the readability and structure of the transcribed text.

Speaker diarization

- It can identify and differentiate between multiple speakers in an audio file, enabling the separation of individual voices and facilitating speaker-based analysis.
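To show how automatic punctuation and speaker diarization are switched on in practice, here is a hedged sketch that builds a JSON request body for the service's REST `speech:recognize` method. The field names follow the v1 REST API as we understand it, so check them against the current reference before relying on them. The helper name `build_recognize_request` and the `gs://example-bucket/...` URI are placeholders of our own, and the sketch only constructs the request; it does not send it or handle authentication.

```python
import json


def build_recognize_request(gcs_uri: str, language_code: str = "en-US",
                            speaker_count: int = 2) -> dict:
    """Build a request body that enables punctuation and speaker diarization."""
    return {
        "config": {
            "languageCode": language_code,
            # Ask the service to insert commas, periods, and question marks.
            "enableAutomaticPunctuation": True,
            # Ask the service to tag each word with a speaker number.
            "diarizationConfig": {
                "enableSpeakerDiarization": True,
                "minSpeakerCount": speaker_count,
                "maxSpeakerCount": speaker_count,
            },
        },
        # Pre-recorded audio stored in Cloud Storage (hypothetical URI).
        "audio": {"uri": gcs_uri},
    }


body = json.dumps(build_recognize_request("gs://example-bucket/meeting.flac"), indent=2)
print(body)
```

The printed JSON would be posted to the recognize endpoint with valid credentials. Live streaming audio uses a different, streaming interface that is not shown here.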

2: Amazon Transcribe

Overview and Importance

Amazon Transcribe is an AI-powered speech recognition service offered by Amazon Web Services (AWS). It enables businesses to convert speech into written text, providing accurate and efficient transcription capabilities. With its scalability and advanced machine learning algorithms, Amazon Transcribe plays a significant role in various industries that require speech-to-text conversion.

Learn more about Amazon Transcribe

Key Features and Capabilities

Amazon Transcribe offers several key features and capabilities:

Automatic speech recognition

- It accurately transcribes spoken language into written text, capturing nuances and context.

Real-time and batch processing

- It supports both streaming audio for real-time transcription and pre-recorded audio files for batch processing, accommodating diverse use cases.

Custom vocabulary and language models

- Users can define custom vocabularies and train custom language models so that industry-specific terms, jargon, and proper nouns are transcribed accurately.

Channel identification

- It can transcribe each channel of a multi-channel recording separately and label the output by channel, which is useful when, for example, each party on a call is recorded on its own channel.

Speaker identification

- It can recognize and distinguish between different speakers in a conversation, enabling speaker-specific transcription and analysis.
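The custom vocabulary, channel identification, and speaker features above map onto settings of a batch transcription job. The sketch below assembles the parameters for such a job as a plain dictionary. The parameter names (`TranscriptionJobName`, `Media`, `Settings`, `VocabularyName`, `ChannelIdentification`, `ShowSpeakerLabels`, `MaxSpeakerLabels`) follow the `StartTranscriptionJob` API as we understand it. The helper name `build_transcription_job`, the job name, the bucket, and the vocabulary name are hypothetical. We also assume that channel identification and speaker labels cannot be combined in one request, which matches our reading of the API reference but should be verified.

```python
from typing import Optional


def build_transcription_job(job_name: str, media_uri: str,
                            language_code: str = "en-US",
                            vocabulary_name: Optional[str] = None,
                            channel_identification: bool = False,
                            max_speakers: Optional[int] = None) -> dict:
    """Assemble keyword arguments for a batch transcription job."""
    # Assumption: the service rejects requests that enable both options.
    if channel_identification and max_speakers is not None:
        raise ValueError("choose channel identification or speaker labels, not both")

    settings = {}
    if vocabulary_name:
        # Name of a custom vocabulary created beforehand (hypothetical here).
        settings["VocabularyName"] = vocabulary_name
    if channel_identification:
        settings["ChannelIdentification"] = True
    if max_speakers is not None:
        settings["ShowSpeakerLabels"] = True
        settings["MaxSpeakerLabels"] = max_speakers

    params = {
        "TranscriptionJobName": job_name,
        "LanguageCode": language_code,
        "Media": {"MediaFileUri": media_uri},
    }
    if settings:
        params["Settings"] = settings
    return params


params = build_transcription_job(
    "demo-job", "s3://example-bucket/call.wav",
    vocabulary_name="medical-terms", channel_identification=True,
)
print(params)
```

With the AWS SDK for Python installed and credentials configured, the dictionary could be passed as keyword arguments to the `start_transcription_job` method of a boto3 `transcribe` client.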

3: Microsoft Azure Speech to Text

Overview and Importance

Microsoft Azure Speech to Text is an AI-based service offered by Microsoft Azure that provides speech recognition capabilities. It allows users to convert spoken language into written text, enabling applications to process and analyze audio content more effectively. Azure Speech to Text plays a crucial role in various industries where accurate and efficient speech recognition is essential.

Learn more about Microsoft Azure Speech to Text

Key Features and Capabilities

Microsoft Azure Speech to Text offers several key features and capabilities:

Automatic speech recognition

- It uses advanced machine learning models to transcribe spoken words into written text accurately.

Real-time and batch processing

- It supports both real-time transcription for streaming audio and batch processing for pre-recorded audio files, catering to diverse use cases.

Customization options

- Users can customize language models, acoustic models, and vocabularies to improve recognition accuracy for specific domains, dialects, or terminology.

Speaker diarization

- It can identify and differentiate between multiple speakers in a conversation, attributing spoken content to specific individuals.

Language support

- It supports a wide range of languages, allowing users to transcribe content in various linguistic contexts.

4: IBM Watson Speech to Text

Overview and Importance

IBM Watson Speech to Text is an AI-based service that offers speech recognition capabilities. It enables users to convert spoken language into written text, facilitating the analysis and understanding of audio content. IBM Watson Speech to Text plays a significant role in various industries where accurate and efficient speech recognition is crucial.

Learn more about IBM Watson Speech to Text

Key Features and Capabilities

IBM Watson Speech to Text provides several key features and capabilities:

Accurate transcription

- It utilizes deep learning techniques and advanced algorithms to accurately transcribe spoken words into written text.

Real-time and batch processing

- It supports both real-time transcription for streaming audio and batch processing for pre-recorded audio files, accommodating a wide range of use cases.

Customization options

- Users can create custom language models to improve recognition accuracy for specific domains, dialects, or vocabulary.

Multilingual support

- It supports a variety of languages, allowing users to transcribe content in different linguistic contexts.

Speaker diarization

- It can identify and differentiate between multiple speakers, attributing spoken content to specific individuals.
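Speaker diarization output typically arrives as a stream of words, each tagged with a speaker label, and the application has to assemble those words into readable turns. The sketch below shows one way to do that. The input shape, a list of `(speaker_label, word)` pairs in spoken order, is an assumption made for illustration and is not the exact response format of IBM Watson or any other platform; the function name `group_into_turns` is also our own.

```python
from itertools import groupby
from typing import List, Tuple


def group_into_turns(words: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Merge consecutive words from the same speaker into one turn."""
    turns = []
    # groupby only merges adjacent items, which is what we want: a speaker
    # who talks again later starts a new turn.
    for speaker, run in groupby(words, key=lambda pair: pair[0]):
        turns.append((speaker, " ".join(word for _, word in run)))
    return turns


words = [
    ("Speaker 1", "Hello"), ("Speaker 1", "there."),
    ("Speaker 2", "Hi."),
    ("Speaker 1", "How"), ("Speaker 1", "are"), ("Speaker 1", "you?"),
]
for speaker, text in group_into_turns(words):
    print(f"{speaker}: {text}")
```

In a real integration, an adapter would first convert the platform's response into these pairs before grouping.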

5: Nuance Communications

Overview and Importance

Nuance Communications is a leading provider of speech and imaging solutions. Their speech recognition technology focuses on understanding and transcribing human speech, making it an important player in the field of speech-to-text conversion. With a strong emphasis on natural language understanding and accuracy, Nuance Communications' solutions are used across various industries.

Learn more about Nuance Communications

Key Features and Capabilities

Nuance Communications offers a range of key features and capabilities in their speech recognition solutions:

Accurate speech recognition

- Nuance's advanced algorithms and models ensure high accuracy in transcribing spoken language into text, even in challenging audio environments.

Natural language understanding

- Their technology goes beyond simple speech recognition and incorporates natural language processing techniques to understand the meaning and context of spoken words.

Speaker identification

- Nuance can identify and distinguish between multiple speakers, allowing for speaker diarization and attribution of speech to specific individuals.

Language support

- Their solutions cover a wide range of languages, enabling transcription and analysis in different linguistic contexts.

Customization and adaptation

- Nuance provides tools and capabilities for customizing language models and adapting them to specific domains or vocabularies.

6: Speechmatics

Overview and Importance

Speechmatics is an AI-driven speech recognition and transcription technology provider. Their platform specializes in converting spoken language into written text with high accuracy and speed. With a focus on multilingual and real-time transcription capabilities, Speechmatics plays a significant role in the field of speech-to-text conversion.

Learn more about Speechmatics

Key Features and Capabilities

Speechmatics offers several key features and capabilities in their speech recognition solutions:

Multilingual support

- Speechmatics supports a wide range of languages, allowing for transcription and analysis in diverse linguistic contexts.

Real-time transcription

- Their technology enables real-time speech recognition and transcription, making it suitable for live events, customer support, and other time-sensitive applications.

Speaker diarization

- Speechmatics can identify and differentiate between multiple speakers, providing speaker attribution and segmentation of transcribed text.

Customization and adaptation

- The platform offers tools for creating custom language models and adapting them to specific domains, vocabularies, and speaker profiles.

High accuracy and scalability

- Speechmatics leverages state-of-the-art AI techniques to achieve high transcription accuracy, even in challenging audio environments. Their solutions are designed to handle large-scale transcription tasks with high scalability.
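Real-time transcription, as described above, generally works by sending audio to the service in small chunks as it is captured rather than uploading a complete file. The sketch below shows only the chunking step for raw PCM audio. It is not Speechmatics' client library: the function name `pcm_chunks`, the 100 ms chunk length, and the 16 kHz, 16-bit mono format are illustrative assumptions, and the code that would send each chunk over a streaming connection is omitted.

```python
from typing import Iterator


def pcm_chunks(audio: bytes, sample_rate: int = 16000,
               sample_width: int = 2, chunk_ms: int = 100) -> Iterator[bytes]:
    """Yield fixed-duration chunks of raw mono PCM audio."""
    # Bytes per chunk = samples per second * bytes per sample * seconds per chunk.
    chunk_size = sample_rate * sample_width * chunk_ms // 1000
    if chunk_size <= 0:
        raise ValueError("chunk size must be at least one byte")
    for start in range(0, len(audio), chunk_size):
        # The final chunk may be shorter than chunk_size.
        yield audio[start:start + chunk_size]


one_second_of_silence = bytes(16000 * 2)
chunks = list(pcm_chunks(one_second_of_silence))
print(len(chunks), len(chunks[0]))
```

Smaller chunks reduce latency at the cost of more network messages, so the chunk length is usually tuned per application.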

7: Rev.ai

Overview and Importance

Rev.ai is an AI-powered automatic speech recognition (ASR) platform provided by Rev.com, a leading transcription and captioning service. The platform leverages advanced machine learning algorithms to convert spoken language into accurate written text. Rev.ai is known for its speed, accuracy, and flexibility, making it a valuable tool for a wide range of applications.

Learn more about Rev.ai

Key Features and Capabilities

Rev.ai offers several key features and capabilities in its automatic speech recognition platform:

High accuracy

- The platform utilizes state-of-the-art ASR technology to achieve industry-leading accuracy in transcribing spoken language, even in challenging audio environments.

Real-time transcription

- Rev.ai supports real-time transcription, enabling users to receive instant written text as speech is being spoken.

Customization

- The platform allows users to train custom language models to adapt the transcription to specific domains, vocabularies, and accents, improving accuracy for specialized content.

Speaker diarization

- Rev.ai can differentiate between multiple speakers, providing speaker attribution and segmentation in the transcribed text.

Timestamps and formatting

- The platform includes features to add timestamps and formatting options to the transcriptions, enhancing readability and usability.
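Timestamps are what make a transcript usable for captioning. As an illustration, the sketch below converts timestamped segments into the widely used SubRip (SRT) caption format. The input shape, a list of `(start_seconds, end_seconds, text)` tuples, is an assumption for this example and not Rev.ai's actual response format; the function names are also our own.

```python
from typing import List, Tuple


def to_srt_timestamp(seconds: float) -> str:
    """Format seconds as HH:MM:SS,mmm, the timestamp style used in SRT files."""
    millis = round(seconds * 1000)
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def to_srt(segments: List[Tuple[float, float, str]]) -> str:
    """Render (start, end, text) segments as numbered SRT caption blocks."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        blocks.append(
            f"{index}\n{to_srt_timestamp(start)} --> {to_srt_timestamp(end)}\n{text}"
        )
    # Caption blocks are separated by a blank line.
    return "\n\n".join(blocks) + "\n"


print(to_srt([(0.0, 1.5, "Hello."), (1.5, 3.0, "Hi there.")]))
```

The resulting text can be saved with an `.srt` extension and loaded by most video players.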

Conclusion

AI platforms for speech recognition and transcription play a significant role in converting spoken language into accurate and usable written text. These platforms utilize advanced machine learning algorithms to analyze and understand audio data, enabling various industries to extract valuable insights and enhance accessibility.

Recapping the top seven AI platforms for speech recognition and transcription:

Google Cloud Speech-to-Text: Offers real-time and batch transcription, noise handling, and extensive language support. It is known for its accuracy and scalability.

Amazon Transcribe: Provides automatic speech recognition with features like real-time transcription, speaker identification, and punctuation. It offers high accuracy and supports multiple languages.

Microsoft Azure Speech to Text: Enables real-time and batch transcription, speaker diarization, and broad language support. It is customizable and integrates well with other Azure services.

IBM Watson Speech to Text: Offers accurate transcription with customization options for specific domains and supports multiple languages. It provides speaker diarization and punctuation features.

Nuance Communications: Known for its accuracy and advanced language modeling capabilities. It supports real-time and offline transcription, speaker identification, and customizable language models.

Speechmatics: Provides high-quality transcription with real-time and batch processing options. It supports multiple languages and offers customization for specific vocabularies and accents.

Rev.ai: Offers high accuracy, real-time transcription, and customization options. It supports speaker diarization, timestamps, and formatting. It finds applications in media, market research, call centers, accessibility, and content creation.

These platforms share core features such as high accuracy, real-time transcription, customization, and multi-language support, but they differ in the details. Users should evaluate them against their specific needs, such as the languages, audio conditions, and domain vocabulary they have to handle. By choosing the right AI platform, businesses can convert spoken language into written text accurately, enabling better data analysis, accessibility, and informed decision-making.